MLLM as Retriever: Interactively Learning Multimodal Retrieval for Embodied Agents

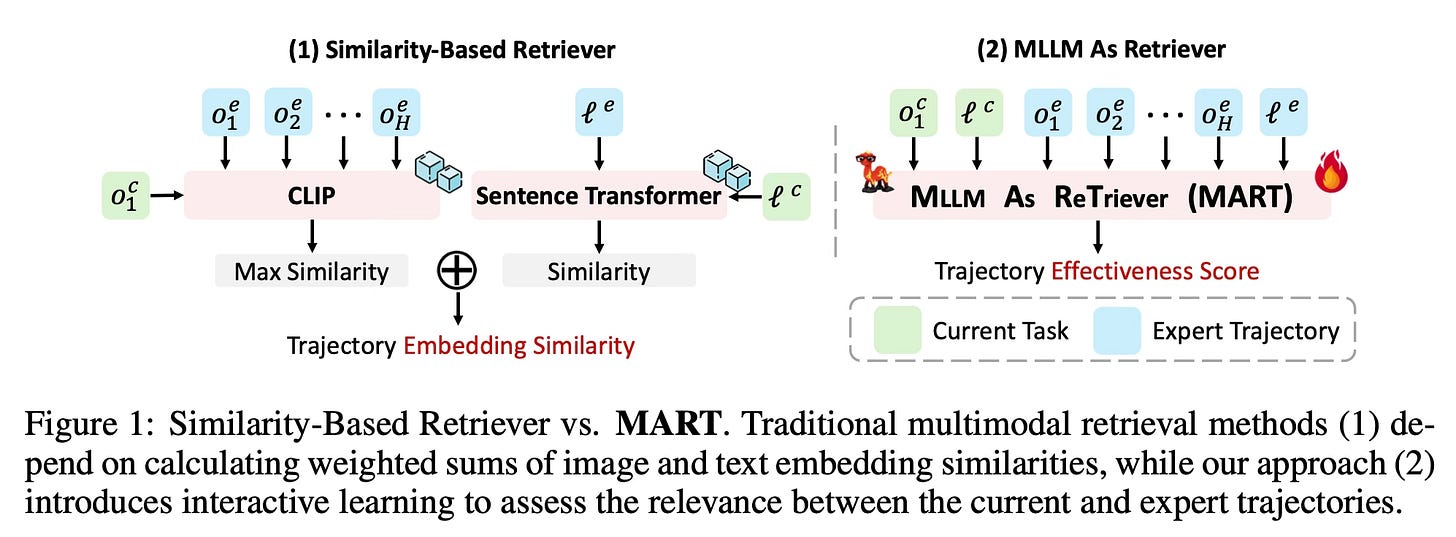

Today's paper introduces MLLM As ReTriever (MART), a new method for enhancing the performance of embodied agents in complex tasks. MART uses interaction data to fine-tune a Multimodal Large Language Model (MLLM) retriever based on preference learning, enabling it to prioritize the most effective trajectories for unseen tasks.

Method Overview

MART integrates interactive learning with the retriever. The method begins by using expert trajectories from training scenarios as prompts for an MLLM agent, allowing it to interact with the environment and collect success rates for different reference trajectories. This interactive feedback data is then organized into preference pairs.
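As a rough illustration of how success rates could be turned into preference pairs, here is a minimal sketch. The dictionary format, the function name `build_preference_pairs`, and the `min_gap` filter are assumptions made for this example and are not taken from the paper.

```python
from itertools import combinations


def build_preference_pairs(success_rates, min_gap=0.0):
    """Turn per-trajectory success rates into (preferred, rejected) pairs.

    success_rates: dict mapping a reference-trajectory id to the success
        rate the agent achieved when prompted with it (hypothetical format).
    min_gap: pairs whose rates differ by no more than this are skipped
        (an assumed filter, not something the paper specifies).
    """
    pairs = []
    for a, b in combinations(sorted(success_rates), 2):
        gap = success_rates[a] - success_rates[b]
        if abs(gap) <= min_gap:
            continue  # no clear preference between the two trajectories
        pairs.append((a, b) if gap > 0 else (b, a))
    return pairs


# Made-up success rates for three reference trajectories on one task.
rates = {"traj_a": 0.8, "traj_b": 0.2, "traj_c": 0.8}
pairs = build_preference_pairs(rates)
print(pairs)
```

With these made-up rates, `traj_a` and `traj_c` are each preferred over `traj_b`, while the tie between `traj_a` and `traj_c` yields no pair.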

These preference pairs are used to fine-tune an MLLM (LLaVA in this case) with a Bradley-Terry head. Fine-tuning teaches the retriever to prioritize more effective trajectories for unseen tasks, combining the general capabilities of MLLMs with the ability to assess how well a trajectory guides the task.
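For readers unfamiliar with the Bradley-Terry model, the sketch below shows the standard pairwise objective: the probability that one item is preferred over another is the sigmoid of their score difference, and training minimizes the negative log-likelihood of the observed preferences. The scalar scores stand in for whatever the retriever's head outputs. The function names are hypothetical, and this is not the authors' training code.

```python
import math


def bt_pair_loss(score_preferred, score_rejected):
    """Negative log-likelihood of one preference under Bradley-Terry.

    P(preferred beats rejected) = sigmoid(score_preferred - score_rejected),
    so the loss is -log(sigmoid(d)) = log(1 + exp(-d)), computed stably.
    """
    d = score_preferred - score_rejected
    return max(-d, 0.0) + math.log1p(math.exp(-abs(d)))


def bt_loss(score_pairs):
    """Mean Bradley-Terry loss over (preferred_score, rejected_score) pairs."""
    return sum(bt_pair_loss(w, l) for w, l in score_pairs) / len(score_pairs)


# Equal scores give a 50/50 preference, so the loss is log(2).
tie_loss = bt_loss([(0.0, 0.0)])
# Scoring the preferred trajectory higher lowers the loss.
good_loss = bt_loss([(2.0, 0.0)])
bad_loss = bt_loss([(0.0, 2.0)])
```

Minimizing this loss pushes the score of the preferred trajectory above that of the rejected one, which is what lets the scores be used for ranking later.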

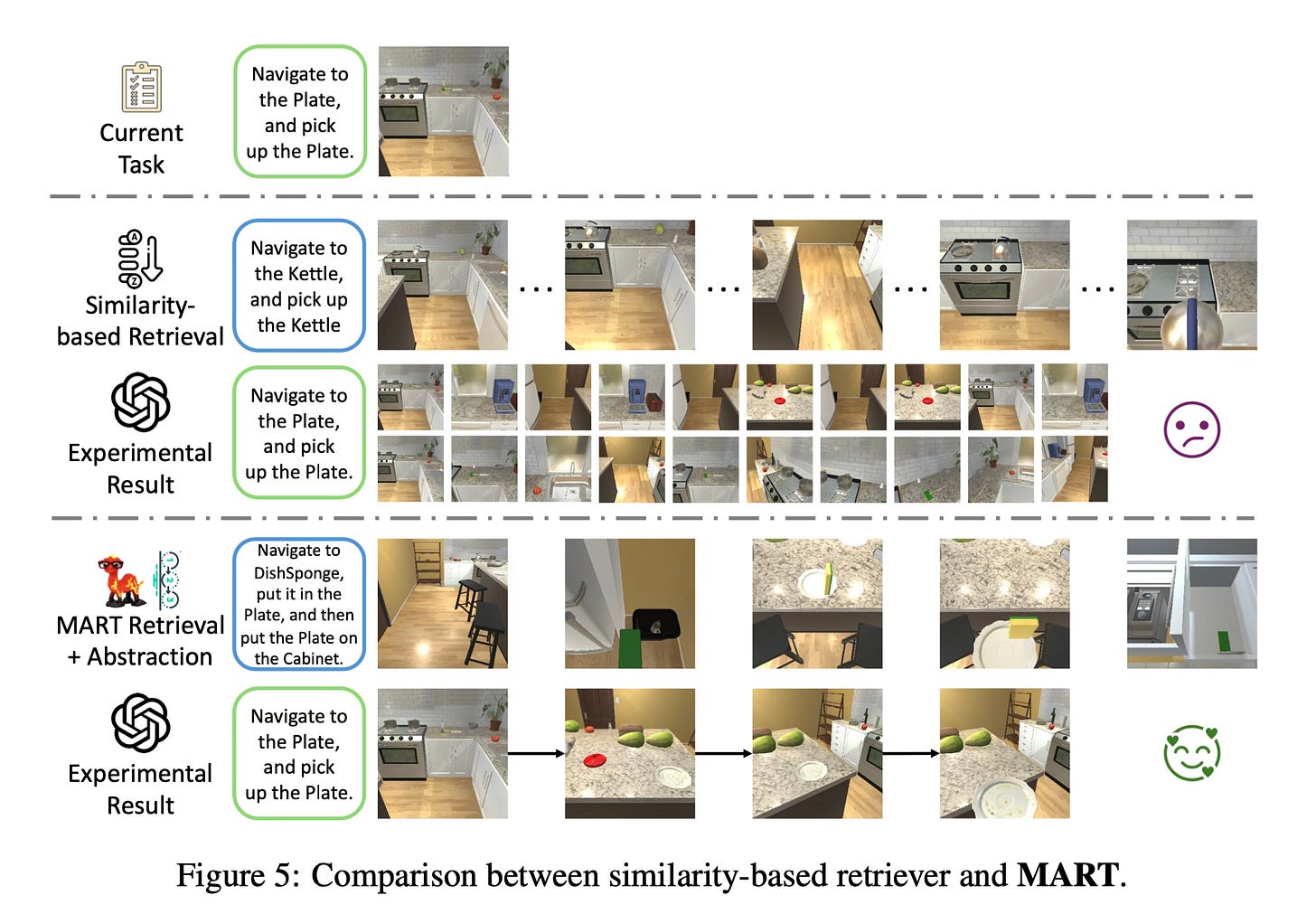

The paper also introduces a Trajectory Abstraction mechanism, which utilizes the summarization capabilities of MLLMs to represent trajectories using fewer tokens while preserving key information. This approach allows agents to better understand important milestones in the trajectory and is particularly useful for long-horizon tasks, as it reduces the required context window length and removes potentially distracting information.

The MART pipeline involves several steps:

1. Constructing memory databases containing expert trajectories from previous successful task executions.

2. Using interactive learning to train the trajectory retriever by sampling trajectories and evaluating their effectiveness based on task success rates.

3. Fine-tuning the MLLM retriever using preference pairs derived from the interactive feedback.

4. Applying the Trajectory Abstraction mechanism to condense trajectories while retaining essential information.

5. Using the fine-tuned retriever to select the most effective trajectory for guiding the agent in new tasks.
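The final retrieval step can be pictured as scoring every trajectory in memory against the new task and keeping the highest-scoring one. In the sketch below, `retrieve_best`, the memory record format, and `toy_score` are all hypothetical. In particular, `toy_score` is a simple word-overlap stand-in used only to make the example runnable. In MART the score would come from the fine-tuned MLLM retriever.

```python
def retrieve_best(task, memory, score_fn):
    """Return the memory entry that score_fn rates highest for this task."""
    return max(memory, key=lambda traj: score_fn(task, traj))


def toy_score(task, traj):
    """Stand-in scorer: number of words shared by the task and the summary.

    This is NOT the paper's retriever; it only lets the example run.
    """
    return len(set(task.lower().split()) & set(traj["summary"].lower().split()))


# Hypothetical memory of abstracted expert trajectories.
memory = [
    {"id": "t1", "summary": "heat the mug in the microwave"},
    {"id": "t2", "summary": "put the tomato in the fridge"},
]

best = retrieve_best("put the apple in the fridge", memory, toy_score)
print(best["id"])
```

Passing the scorer in as a function keeps the selection logic independent of the model, so the toy scorer could be swapped for a learned one without changing `retrieve_best`.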

Results

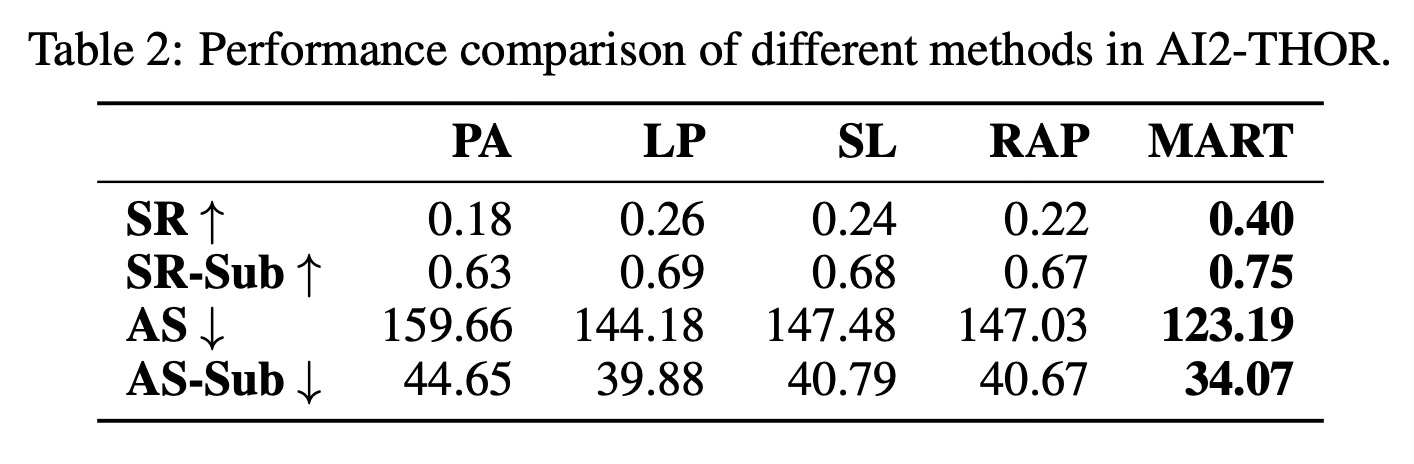

In the reported experiments, MART improves task success rates in unseen scenes over baseline methods, consistently outperforming them by more than 10% across the environments tested.

Conclusion

MART fine-tunes a general-purpose MLLM as a retriever capable of assessing trajectory effectiveness. Interactive learning and the Trajectory Abstraction mechanism together enable agents to make better use of past experience and operate more effectively in unseen environments. The approach shows promise for improving embodied agents across a range of complex tasks and environments. For more information, please consult the full paper.

Congrats to the authors for their work!

Yue, Junpeng, et al. "MLLM as Retriever: Interactively Learning Multimodal Retrieval for Embodied Agents." arXiv preprint arXiv:2410.03450 (2024).